Terraform

AKS & SQL Integration w/ Terraform & Dockerized NGINX Deployment

Project URL: https://github.com/bgcodehub/profisee-code

1. Introduction

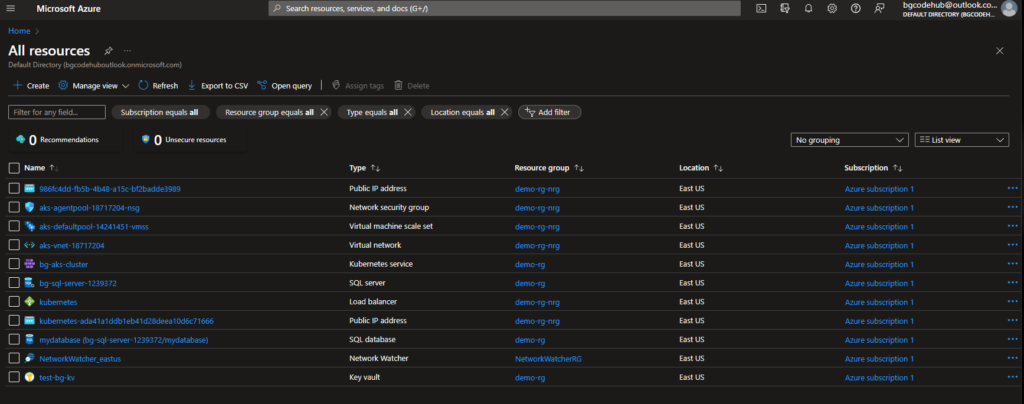

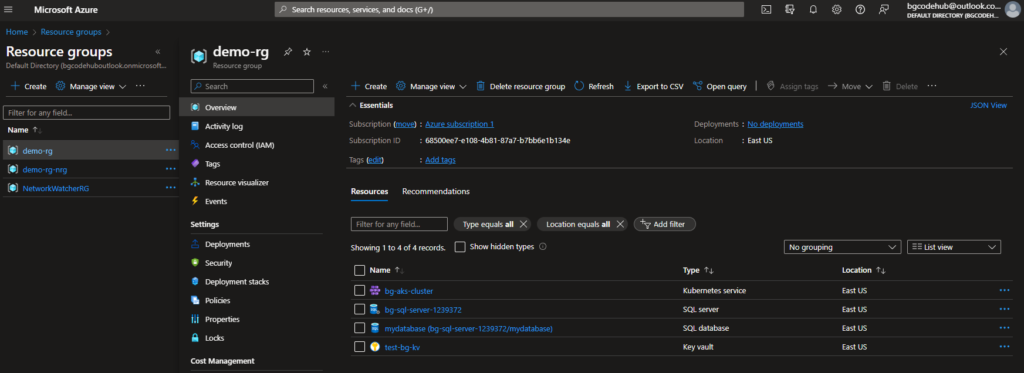

- Building on the previous AKS cluster setup, in Level 3 I aimed to integrate the cluster with Azure SQL Database and deploy an NGINX app from Docker Hub to it. Below are the key additions and nuances of this implementation.

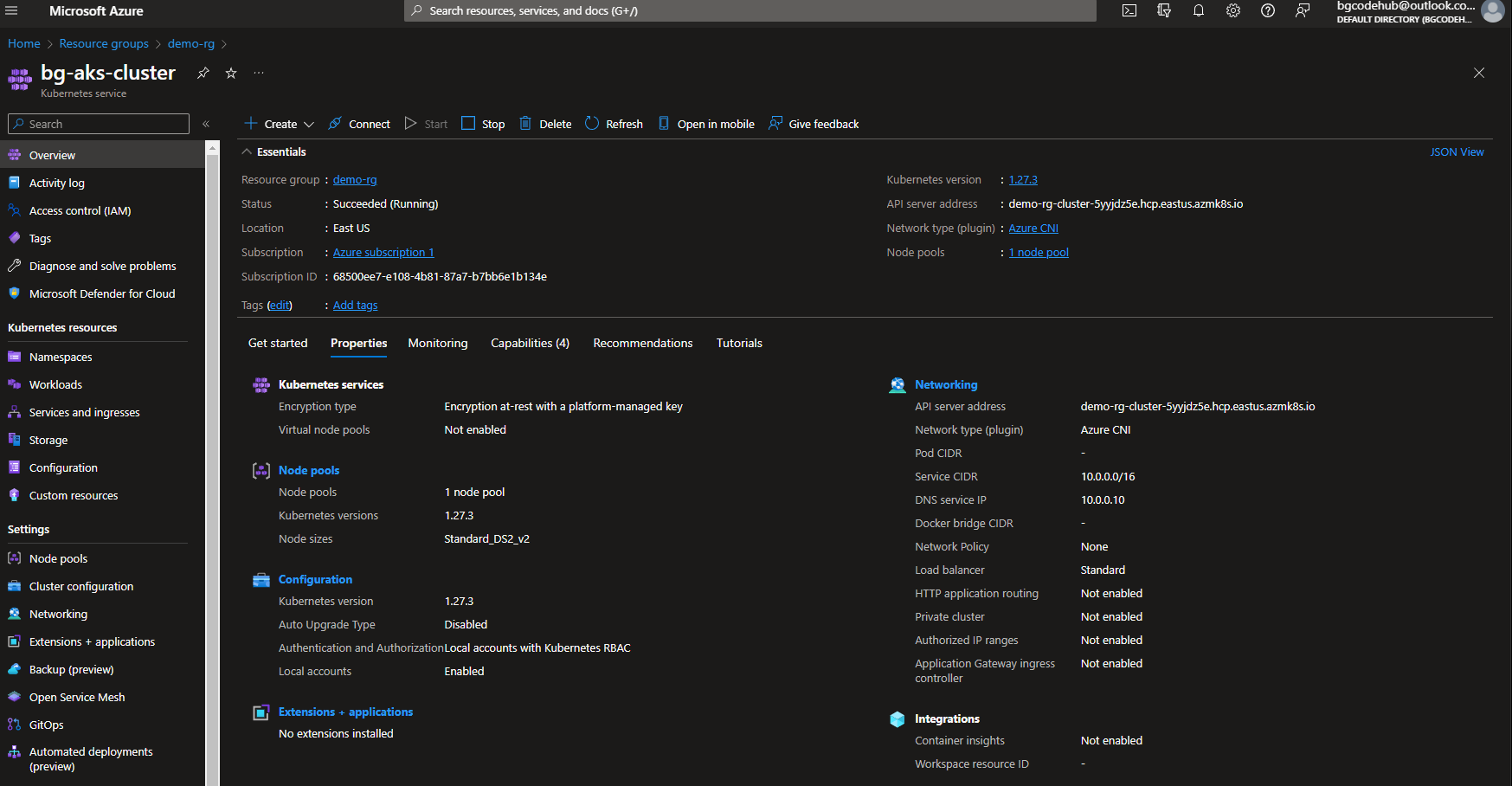

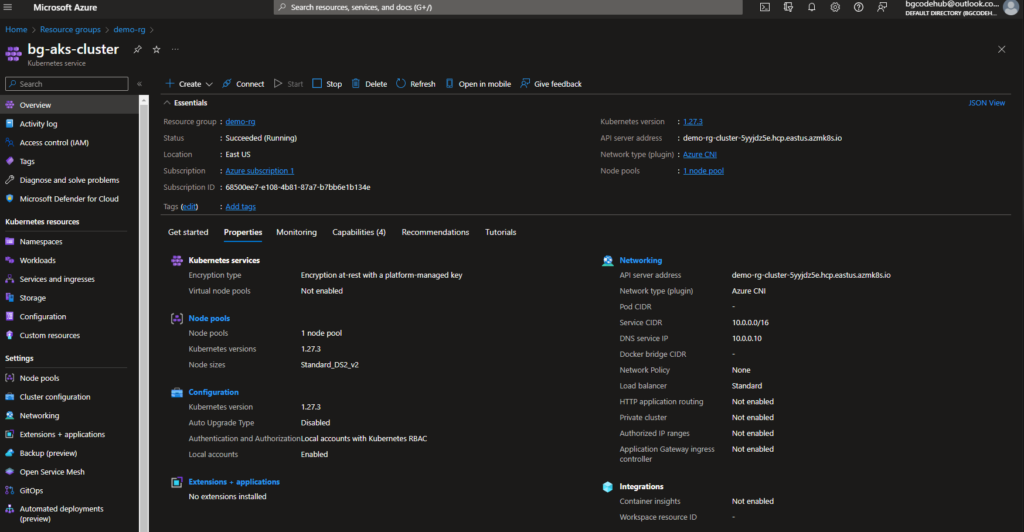

2. AKS Configuration

- Azure Provider & Kubernetes Provider:

- While I continue to use the ‘azurerm’ provider for Azure resources, I’ve introduced the ‘kubernetes’ provider to work directly with the AKS cluster.

- AKS Cluster:

- I reuse the AKS cluster setup from Level 2. The Kubernetes provider reads the ‘~/.kube/config’ file, which allows Terraform to communicate with the AKS cluster, and I’ve set the context to “bg-aks-cluster”.
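
A minimal sketch of the provider configuration described above, assuming the default ‘hashicorp/azurerm’ and ‘hashicorp/kubernetes’ sources. Version constraints and any other settings the project may use are omitted:

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
    kubernetes = {
      source = "hashicorp/kubernetes"
    }
  }
}

# Azure resources (AKS, SQL) are managed through azurerm.
provider "azurerm" {
  features {}
}

# Kubernetes objects are created inside the existing AKS cluster,
# using the credentials stored in the local kubeconfig file.
provider "kubernetes" {
  config_path    = "~/.kube/config"
  config_context = "bg-aks-cluster"
}
```

Reading the kubeconfig file assumes it was populated beforehand, for example with ‘az aks get-credentials’. An alternative is to pass the ‘kube_config’ attributes exported by the ‘azurerm_kubernetes_cluster’ resource directly into the provider, which removes the dependency on local files.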

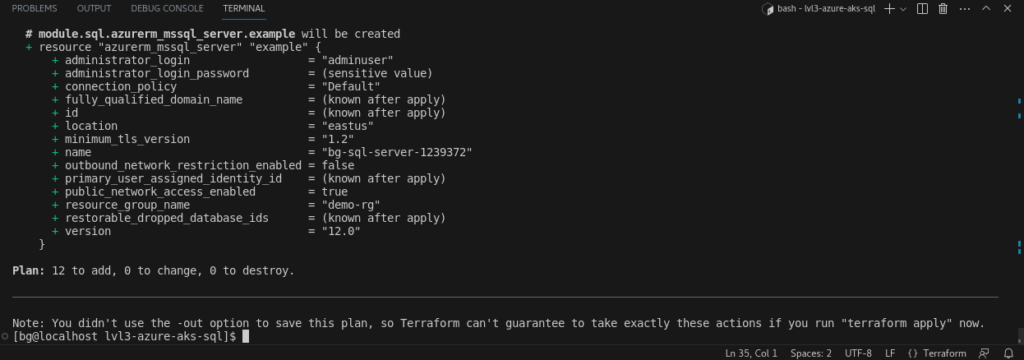

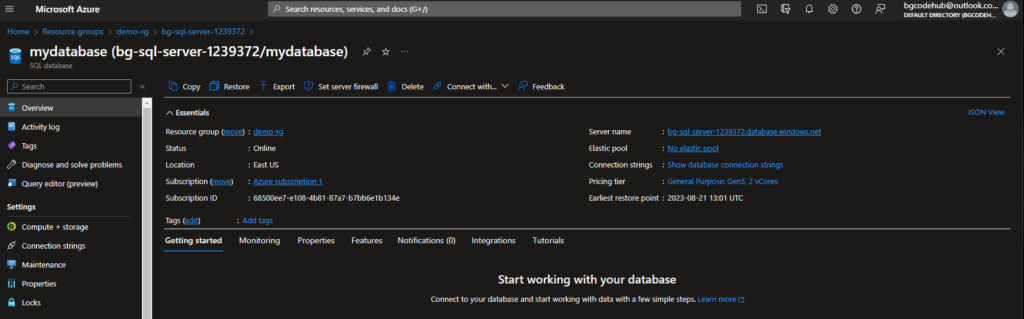

3. Azure SQL Database Integration

- I’ve deployed both an Azure SQL Server and its associated database using the ‘sql’ module.

- Parameters:

- ‘server_name’: Name of the SQL server instance.

- ‘database_name’: Name of the database to be created.

- ‘administrator_login’ and ‘administrator_login_password’: Credentials for the SQL server administrator.
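
A sketch of how the ‘sql’ module might be called with these parameters. The module path ‘./modules/sql’, the server and database names, and the variable names are hypothetical, and the real module likely needs further inputs such as the resource group and location:

```hcl
# Admin credentials are supplied at plan/apply time (e.g. via TF_VAR_*
# environment variables) rather than committed to the repository.
variable "sql_admin_login" {
  type = string
}

variable "sql_admin_password" {
  type      = string
  sensitive = true # redacted from plan/apply output, but still kept in state
}

module "sql" {
  source = "./modules/sql" # hypothetical module path

  server_name                  = "bg-sql-server" # hypothetical name
  database_name                = "bg-sql-db"     # hypothetical name
  administrator_login          = var.sql_admin_login
  administrator_login_password = var.sql_admin_password
}
```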

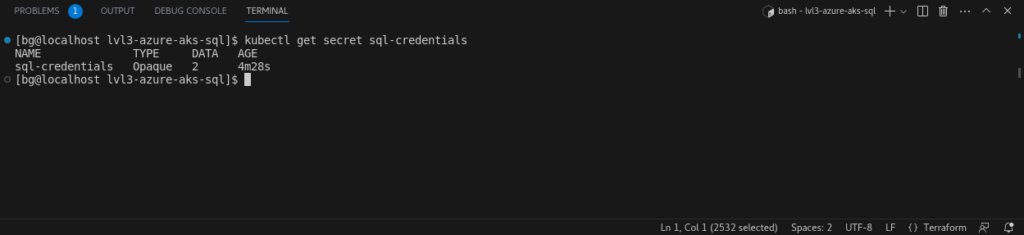

- Kubernetes Secret for SQL Credentials:

- To keep the SQL credentials out of application code, a Kubernetes secret named ‘sql-credentials’ is created. Kubernetes-based applications can reference this secret to access the SQL server without the credentials appearing in plaintext in the app’s source code. Secret values are only base64-encoded in the API object, so access to the secret should still be restricted with RBAC.

- The ‘Opaque’ type marks the secret as arbitrary user-defined data that Kubernetes does not validate or interpret.
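
The secret could be created from Terraform with the kubernetes provider’s ‘kubernetes_secret’ resource. In this sketch the key names ‘username’ and ‘password’ and the variable names are assumptions:

```hcl
variable "sql_admin_login" {
  type = string
}

variable "sql_admin_password" {
  type      = string
  sensitive = true
}

resource "kubernetes_secret" "sql_credentials" {
  metadata {
    name = "sql-credentials"
  }

  # Arbitrary key/value data that Kubernetes does not interpret.
  type = "Opaque"

  # Values are given in plain text; the provider base64-encodes them
  # when it writes the Secret. They are also stored in Terraform state.
  data = {
    username = var.sql_admin_login # assumed key name
    password = var.sql_admin_password # assumed key name
  }
}
```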

4. Dockerized NGINX Application

- HTML Content:

- The ‘index.html’ page displays a simple “Hello World from NGINX!” message.

- Dockerfile:

- I’m using the latest version of the official NGINX Docker image.

- The ‘index.html’ is copied into ‘/usr/share/nginx/html/’, the default directory from which NGINX serves static content.

- The resulting Docker image is tagged as ‘bgcodehub/my-hello-world-nginx’ and pushed to Docker Hub.

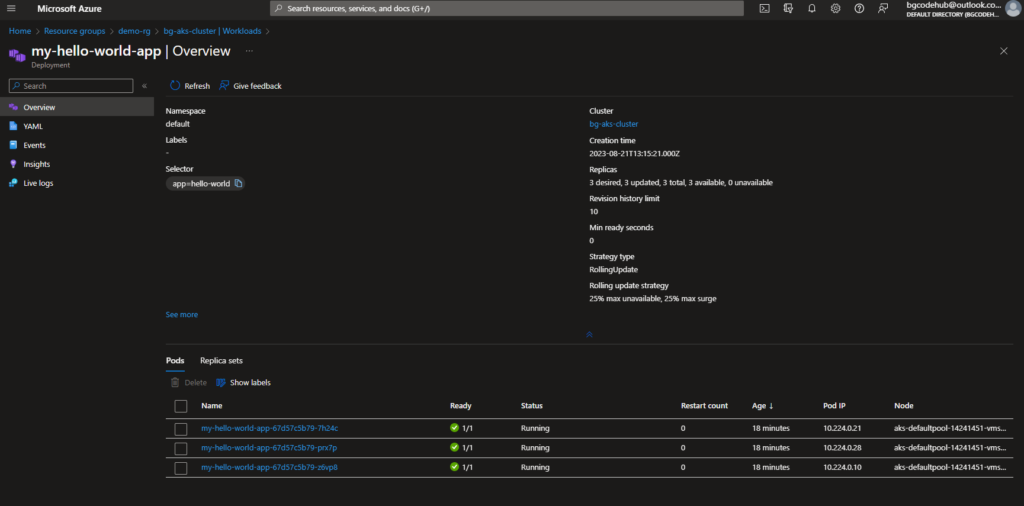

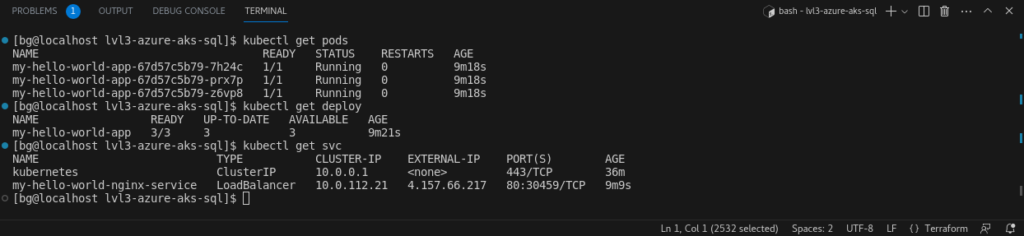

5. Kubernetes Deployment of Dockerized NGINX

- Deployment (deployment.yaml):

- Metadata:

- The deployment is named ‘my-hello-world-app’.

- Specifications:

- I’m deploying three replicas of the application for availability and load distribution.

- The Docker image ‘bgcodehub/my-hello-world-nginx’ is pulled from Docker Hub.

- Environment variables ‘SQL_USERNAME’ and ‘SQL_PASSWORD’ are populated in the container from the Kubernetes secret ‘sql-credentials’. This way the containerized app can read the SQL credentials at runtime without them being hard-coded in the image or exposed in the app source.

- Service (service.yaml):

- Metadata:

- The service is named ‘my-hello-world-nginx-service’.

- Specifications:

- The service directs traffic to pods labeled with ‘app: hello-world’.

- It listens on port 80 (the standard HTTP port) and forwards traffic to target port 80 on the pods.

- The ‘LoadBalancer’ type exposes the service externally: Azure provisions a load balancer with a public IP address, making the application accessible over the internet.
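
The project defines these objects in ‘deployment.yaml’ and ‘service.yaml’. Since the rest of the stack is Terraform, the sketch below shows roughly equivalent objects using the kubernetes provider’s ‘kubernetes_deployment’ and ‘kubernetes_service’ resources instead; it is an alternative, not the project’s files. The container name ‘nginx’, the ‘app: hello-world’ label on the Deployment (inferred from the service selector), and the secret keys ‘username’ and ‘password’ are assumptions:

```hcl
resource "kubernetes_deployment" "hello_world" {
  metadata {
    name = "my-hello-world-app"
  }

  spec {
    replicas = 3

    # Must match the pod template labels and the service selector.
    selector {
      match_labels = {
        app = "hello-world"
      }
    }

    template {
      metadata {
        labels = {
          app = "hello-world"
        }
      }

      spec {
        container {
          name  = "nginx" # assumed container name
          image = "bgcodehub/my-hello-world-nginx"

          port {
            container_port = 80
          }

          # Inject credentials from the "sql-credentials" secret.
          env {
            name = "SQL_USERNAME"
            value_from {
              secret_key_ref {
                name = "sql-credentials"
                key  = "username" # assumed key
              }
            }
          }

          env {
            name = "SQL_PASSWORD"
            value_from {
              secret_key_ref {
                name = "sql-credentials"
                key  = "password" # assumed key
              }
            }
          }
        }
      }
    }
  }
}

resource "kubernetes_service" "hello_world" {
  metadata {
    name = "my-hello-world-nginx-service"
  }

  spec {
    # Route traffic to pods carrying this label.
    selector = {
      app = "hello-world"
    }

    # Service port 80 forwards to container port 80.
    port {
      port        = 80
      target_port = 80
    }

    # Ask Azure for an external load balancer with a public IP.
    type = "LoadBalancer"
  }
}

# Public IP assigned by Azure once the load balancer is ready.
output "hello_world_external_ip" {
  value = kubernetes_service.hello_world.status[0].load_balancer[0].ingress[0].ip
}
```

By default the ‘kubernetes_service’ resource waits for the load balancer to receive an address, so the output is populated once ‘terraform apply’ finishes.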

6. Implementation Choices

- Docker with NGINX: NGINX was chosen due to its performance, reliability, and scalability. Containerizing with Docker ensures consistent deployments across any environment.

- Environment Variables from Secrets: Instead of hardcoding sensitive details, Kubernetes secrets provide the environment variables for the deployment. This keeps sensitive data such as SQL credentials out of the container image and the deployment manifest.

- LoadBalancer Service: To expose the application to the internet, a LoadBalancer service type was chosen. This integrates with Azure’s underlying infrastructure to provision an external IP and manage traffic distribution.

7. Potential Improvements

- Database Integration: Although the SQL credentials are passed to the NGINX container, nothing in the current implementation uses them. A real-world deployment would need an application layer that actually connects to the database with these credentials.

- Horizontal Pod Autoscaling: While three replicas provide availability, a Horizontal Pod Autoscaler (HPA) could further optimize resource usage by scaling the replica count with demand.

- Use of Helm: Helm could be employed in the future to package and manage the Kubernetes application, streamlining deployments and rollbacks.
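
The Horizontal Pod Autoscaler suggested above could be sketched with the kubernetes provider’s ‘kubernetes_horizontal_pod_autoscaler_v2’ resource. The HPA name, the 3 to 10 replica range, and the 70% CPU target are illustrative values, not tuned ones:

```hcl
resource "kubernetes_horizontal_pod_autoscaler_v2" "hello_world" {
  metadata {
    name = "my-hello-world-app-hpa" # hypothetical name
  }

  spec {
    min_replicas = 3  # keep the current baseline for availability
    max_replicas = 10 # illustrative upper bound

    # Scale the existing Deployment.
    scale_target_ref {
      api_version = "apps/v1"
      kind        = "Deployment"
      name        = "my-hello-world-app"
    }

    # Add or remove replicas to keep average CPU near the target.
    metric {
      type = "Resource"
      resource {
        name = "cpu"
        target {
          type                = "Utilization"
          average_utilization = 70 # illustrative target (%)
        }
      }
    }
  }
}
```

CPU-utilization scaling only works if the container declares CPU requests (for example through a ‘resources’ block in the Deployment), because utilization is measured relative to the request.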

8. Key Takeaways

- In this Level 3 setup, I’ve achieved:

- Containerization: With Docker, I’ve containerized the web app, ensuring consistency in deployment regardless of the environment.

- Kubernetes Orchestration: Using Kubernetes, I’ve automated the deployment of the app on the AKS cluster, with Kubernetes keeping three replicas running.

- Secret Management: Kubernetes secrets manage sensitive information, allowing the app to securely access SQL credentials without hardcoding them.

- Public Accessibility: Through a Kubernetes LoadBalancer service, I’ve made the app accessible over the web.

- This whole setup ensures an end-to-end automated, scalable, and secure deployment of a web application in Azure using Terraform, Docker, and Kubernetes.

9. Q&A

- Why use NGINX and not another web server or application?

- NGINX is known for its high performance, small footprint, and flexibility. For demonstration purposes, it offers a straightforward way to showcase a containerized web application. However, the methodology used here can be applied to other applications or web servers just as efficiently.

- How would you ensure the database is highly available and fault-tolerant?

- In Azure, I’d utilize Azure SQL Database with geo-replication and failover groups. This ensures that even if a primary database in one region faces issues, traffic can be redirected to a secondary replica in another region.

- What would you consider if this were a production-grade application with high traffic?

- For high traffic, I would consider implementing Azure Front Door or Azure CDN to cache content at the edge and reduce the load on the AKS cluster. Additionally, I’d ensure that monitoring and logging with Azure Monitor and Log Analytics are in place. Proper resource scaling, both for AKS and the SQL database, would be crucial.

- How would you handle sensitive data other than SQL credentials, such as API keys or other secrets?

- I’d continue to utilize Kubernetes secrets and, for an added layer of security, integrate with Azure Key Vault to store and manage sensitive information.
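
The two Azure-side answers above, failover groups and Azure Key Vault, can be sketched with the ‘azurerm_mssql_failover_group’ and ‘azurerm_key_vault_secret’ resources. The resource IDs are passed in as hypothetical variables; in a real configuration the secondary server would be a second ‘azurerm_mssql_server’ in another region, and the names used here are placeholders:

```hcl
# Hypothetical inputs; normally these would be references to other resources.
variable "primary_server_id" {
  type = string
}

variable "secondary_server_id" {
  type = string # SQL server in a different region
}

variable "database_id" {
  type = string
}

variable "key_vault_id" {
  type = string
}

variable "sql_admin_password" {
  type      = string
  sensitive = true
}

# Replicates the database to the partner server and fails over automatically.
resource "azurerm_mssql_failover_group" "sql" {
  name      = "bg-sql-failover-group" # placeholder name
  server_id = var.primary_server_id
  databases = [var.database_id]

  partner_server {
    id = var.secondary_server_id
  }

  read_write_endpoint_failover_policy {
    mode          = "Automatic"
    grace_minutes = 60 # wait before automatic failover (illustrative)
  }
}

# Stores the SQL admin password in Key Vault instead of only in Kubernetes.
resource "azurerm_key_vault_secret" "sql_password" {
  name         = "sql-admin-password" # placeholder name
  value        = var.sql_admin_password
  key_vault_id = var.key_vault_id
}
```

To surface Key Vault secrets inside pods, AKS offers the Azure Key Vault provider for the Secrets Store CSI Driver, which mounts secrets as files and can optionally sync them into Kubernetes secrets.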